Who Maketh the Cloud His Chariot. Part I

In the beginning Alan Turing created the Turing machine. But the Turing machine was without form, and the mind of Turing moved upon its blueprints.

And Konrad Zuse said: “Let there be computers.” And there was Z3, the first programmable computer. And Zuse saw Z3, that it was good; and Zuse divided programming from engineering, and created the first high-level programming language and called it Plankalkül.

Z3: The First Computer in the World

And John Mauchly and J. Presper Eckert created ENIAC, the first general-purpose electronic computer. And Z3 and ENIAC were the harbingers of the first computer era.

And Chuck Peddle from Commodore International said: “Let there be personal computers, and let them divide the home computers from the mainframe computers.” And Chuck Peddle made the Commodore PET and divided the personal computers from the mainframe computers: and it was so.

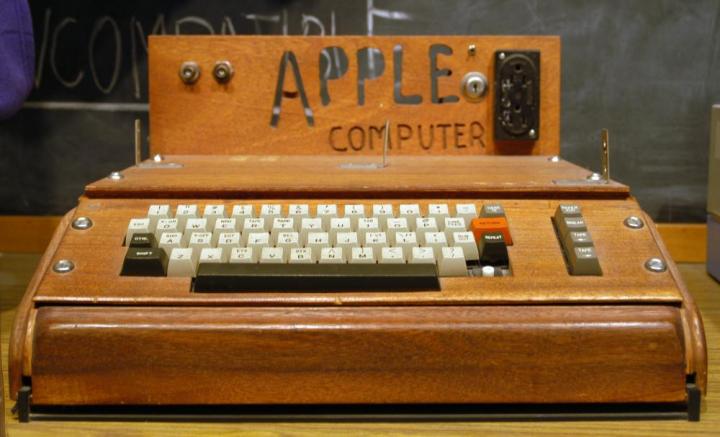

And Steve Jobs (Peace be upon him) and Steve Wozniak said: “Let there be user-friendly personal computers,” and created Apple and the Macintosh. And their personal computers were called Macs, and the personal computers by IBM were called PCs: and both companies saw that it was good.

And Bill Gates said: “Let the PCs be multitasking, and have a graphical interface, and run applications in windows.”

And the PCs started being multitasking, and having graphical interfaces, and running applications in windows: and it was called Windows; and Bill Gates saw that it was... not so bad.

And Macintosh, PC, and Windows were the harbingers of the second computer era.

***

Enough of the epic narratives. Let us take a more light-hearted tone as we describe the presumed onset of the Third Computer Era, which the late Steve Jobs referred to as the Post-PC Age. The adherents of his point of view (their name is Legion: for they are many) claim that we're about to witness one of the biggest breakthroughs in the IT industry since Alan Turing modeled his eponymous machine.

Their point is that the PC, the driving force behind the development of the computer industry since the late 1970s, is about to lose its special status among electronic devices. It used to be the hub of all our devices, the axis on which our whole computer experience rotated; nowadays it is merely primus inter pares, just one of the many devices we use in our lives. The reason for the rapid downfall of the whilom IT autocrat is the emergence of cloud computing.

Cloud Computing: That’s Roughly How It Works

If by any chance you are still unaware of cloud computing, here is a brief outline of the concept. In short, it is the delivery of services rather than products to your computer: you acquire software, computing resources and so on in much the same way as you get electricity or telephone service. Your network (in the overwhelming majority of cases, the Internet) serves as the transmission medium between you and your service provider. As a result, everything you do on your cloud-powered machine amounts to running a program hosted on a remote server. Meanwhile, the software itself and your files are stored on a hard drive that may be thousands of miles away from you, so they don't weigh on your computer's performance at all.

If you think it over, you'll see that a web browser is virtually the only program you need on a cloud-powered computer. With it you can connect to the service provider and run the desired program while using almost none of your computer's own resources. In effect, you can process huge amounts of data on your machine without a single spike in the CPU load graph of your System Monitor.
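The thin-client arrangement described above can be sketched in a few lines of Python. This is a minimal illustration under loudly stated assumptions, not any real provider's API: the "remote" provider is a tiny JSON-over-HTTP server running in a local thread so the example is self-contained, and the `/compute` path, the `ComputeHandler` class, and the sum-of-squares workload are all hypothetical. The point it shows is that the client only ships its input over the network and reads back the answer, while the actual work happens on the server.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

# The demo server is local, so ignore any system proxy settings.
_OPENER = urllib.request.build_opener(urllib.request.ProxyHandler({}))


class ComputeHandler(BaseHTTPRequestHandler):
    """Hypothetical stand-in for the remote provider: it does the heavy lifting."""

    def do_POST(self):
        length = int(self.headers["Content-Length"])
        numbers = json.loads(self.rfile.read(length))
        # The "expensive" work runs here, on the server, not on the client.
        result = {"sum_of_squares": sum(n * n for n in numbers)}
        body = json.dumps(result).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the demo output quiet


def start_provider():
    # Port 0 lets the OS pick a free port; a real provider would be a remote host.
    server = HTTPServer(("127.0.0.1", 0), ComputeHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def run_remotely(url, numbers):
    """The thin client: it only serializes the input and reads back the answer."""
    request = urllib.request.Request(
        url,
        data=json.dumps(numbers).encode(),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with _OPENER.open(request) as response:
        return json.loads(response.read())


if __name__ == "__main__":
    provider = start_provider()
    host, port = provider.server_address
    print(run_remotely(f"http://{host}:{port}/compute", [1, 2, 3]))
    provider.shutdown()
    provider.server_close()
```

Swapping the local thread for a server on another continent would not change the client code at all, which is exactly why a browser-like client can stay so light.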

Let us be visionaries for a while. I bet most of you would agree with me that cloud computing is destined to do away with desktops, and with bulky system units in particular. Instead, we'll get a world permeated by the Web, where our files are retrieved literally from thin air. No more all-pervasive wires, no more noise from loads of cooling fans inside our system units, no more nerd dens with zillions of devices around a monitor glowing alone in the deep dark night.

One of the first to work out the idea of cloud computing was the Canadian technologist Douglas F. Parkhill. In his groundbreaking book The Challenge of the Computer Utility, published in 1966, he thoroughly explored the minutiae of the then-hypothetical cloud network. However, the idea remained pure fiction until the early 2000s. In those turbulent years the dot-com bubble (a wave of large-scale, irrational speculation on the stock market at the end of the last millennium) burst, devastating the Internet sector of the economy. Most investors turned their backs on the IT industry, so newly founded IT companies had to look for new ways to deliver their services to end consumers in order to attract fresh investment. A somber mood prevailed among developers.

Cloud computing became one of the lifesavers that opened up new prospects for desperate IT entrepreneurs. The technology was pioneered by Amazon, which used it to modernize its data centers: the largest online retailer in the world didn't want to put up with the fact that its servers were using no more than 10% of their capacity at the time. Jeff Bezos' team proved right, as implementing cloud computing in the data centers led to a staggering increase in server efficiency. This put an amazing idea into the Amazon CEO's head: “Why don't we commercialize this thing?”

That's how Amazon Web Services, the first cloud computing platform, came about in 2002.

Its launch paved the way for dozens of similar projects in the years that followed.

But that's a completely different story.